Getting Started

In this section we take a quick tour of the key concepts needed to make your first query or mutation against the API.

Overview

ShipHero’s public API lives in https://public-api.shiphero.com, and there are two main endpoints:

- https://public-api.shiphero.com/auth (used for getting tokens)
- https://public-api.shiphero.com/graphql (used for fetching and modifying your data)

In order to make requests, you will first need to get a token that validates your identity. You get a token by authenticating with your user credentials. Once you have it, every request made to the API has to include it as part of the Authorization header as Bearer <your token>.
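As a minimal sketch of the header format described above, the following Python snippet builds (but does not send) a request to the GraphQL endpoint. The helper name build_graphql_request and the placeholder token are illustrative assumptions. Sending the query as a JSON body under a "query" key is the usual GraphQL-over-HTTP convention and is assumed here rather than taken from this guide.

```python
import json
import urllib.request

# Endpoint taken from this guide.
GRAPHQL_URL = "https://public-api.shiphero.com/graphql"


def build_graphql_request(token: str, query: str) -> urllib.request.Request:
    """Build (without sending) a POST request that carries the bearer token."""
    body = json.dumps({"query": query}).encode("utf-8")
    return urllib.request.Request(
        GRAPHQL_URL,
        data=body,
        method="POST",
        headers={
            "Content-Type": "application/json",
            # The token goes in the Authorization header as "Bearer <your token>".
            "Authorization": f"Bearer {token}",
        },
    )


request = build_graphql_request(
    "YOUR_ACCESS_TOKEN",  # placeholder, not a real token
    "query { user_quota { credits_remaining } }",
)
print(request.get_header("Authorization"))
```

Passing the resulting object to urllib.request.urlopen would actually send it; that step is left out so the sketch runs without network access.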

Note

API keys are no longer needed for the new API; using your credentials to get a token is enough to start making requests. If you would like a dedicated user for the public API, you can always create one and use it to get tokens and make requests.

Authentication

Authenticated requests are made with a JWT bearer token. To generate one, provide your user credentials:

curl -X POST -H "Content-Type: application/json" \
  -d '{ "username": "YOUR EMAIL", "password": "YOUR PASSWORD" }' \
  "https://public-api.shiphero.com/auth/token"

The response should look something like this:

{ "access_token": "eyJ0eXAiOiJKV1QiLCJhbGciOiJSUzI1NiIsImtpZCI6IlJUQXlOVU13T0R

rd09ETXhSVVZDUXpBNU5rSkVOVVUxUmtNeU1URTRNMEkzTWpnd05ERkdNdyJ9.aktgc3MiOiJodHRwc

zovL3NoaXBoZXJvLmF1dGgwLmNvbS8iLCJzdWIiOiJhdXRoMHw1YmI3YTI4MjY4YTU2YzRjNTEzMTIx

MWIiLCJhdWQiOiJzaGlwaGVyby1wdWJsaWMtYXBpIiwiaWF0IjoxNTU0OTEwODc0LCJleHAiOjE1NTc

zMzAwNzQsImF6cCI6Im10Y2J3cUkycjYxM0RjT04zOAMRYUhMcVF6UTRka2huIiwic2NvcGUiOiJlbW

FpbCBwcm9maWxlIG9mZmxpbmVfYWNjZXNzIiwiZ3R5IjoicGFzc3dvcmQifQ.lW2UalihR5msHKhJzD

Pvy5SCKxSPyUCMuQ7RXyP2ZNQ2gENjGF2nmdsYlF2CqxH_wITcK10CproQErMK_yAWUSEck8qfC1Fu_

UNc9-xW55ALeCk09ZZD--aB_QFjLVM-ooawby7y4Ysf8H4yEBQpoPwZoQ3DQnu5QBNxd5oOLIP2ezzN

Yvrwjpm-uNN8II5sK9U075Mx1HH31KG14iFt5sEZQmYOz-oSWweVuY6Sd61VFD02sncXOmEZIxu3bda

ZSn1JYaM-ilLce4s748iv75BVDgqj1b2A1lyITeqvFoYWl3PKV56fOlfm8v9QnkSqR0iTGENgV6zZq3

rPRsBLTw", "expires_in": 2419200, "refresh_token": "cBWV3BROyQn_TMxETqr7ALQBaoF

gIzkC-8KkJaIq2HmK_", "scope": "openid profile offline_access",

"token_type": "Bearer" }

You should save the access_token along with the refresh_token. The first is what you will use as the bearer token on any request made to the GraphQL API.

The access token expires after 28 days, but you don’t need your credentials to generate a new one: you can always refresh it, as long as you saved the refresh token. Keep the refresh token in a safe place, as it allows anyone who holds it to generate tokens that grant access to your data.
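The 28-day figure follows from the expires_in value in the sample response (2419200 seconds). The sketch below shows one way a client might track when to refresh. The function names and the one-day safety margin are assumptions made for illustration, not part of the API.

```python
from datetime import datetime, timedelta, timezone


def access_token_expiry(issued_at: datetime, expires_in: int) -> datetime:
    """Return the moment the access token stops being valid."""
    return issued_at + timedelta(seconds=expires_in)


def needs_refresh(
    now: datetime,
    issued_at: datetime,
    expires_in: int,
    safety_margin: timedelta = timedelta(days=1),  # assumed margin, tune as needed
) -> bool:
    """True once we are within the safety margin of the expiry time."""
    return now >= access_token_expiry(issued_at, expires_in) - safety_margin


# expires_in from the sample response, converted to days.
print(timedelta(seconds=2419200).days)  # 28

issued = datetime(2024, 1, 1, tzinfo=timezone.utc)
print(access_token_expiry(issued, 2419200).isoformat())
```

Storing the issue time next to the tokens lets a script refresh ahead of time instead of waiting for a failed request.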

To refresh a token:

curl -X POST -H "Content-Type: application/json" \
  -d '{ "refresh_token": "YOUR REFRESH TOKEN" }' \
  "https://public-api.shiphero.com/auth/refresh"

The response should look something like this:

{ "access_token": "eyJ0eXAiOiJKV1QiLCJhbGciOiJSUzI1NiIsImtpZCI6IlJUQXlOVU13T0Rrd

09ETXhSVVZDUXpBNU5rSkVOVVUxUmtNeU1URTRNMEkzTWpnd05ERkdNdyJ9.aktgc3MiOiJodHRwczov

L3NoaXBoZXJvLmF1dGgwLmNvbS8iLCJzdWIiOiJhdXRoMHw1YmI3YTI4MjY4YTU2YzRjNTEzMTIxMWIi

LCJhdWQiOiJzaGlwaGVyby1wdWJsaWMtYXBpIiwiaWF0IjoxNTU0OTEwODc0LCJleHAiOjE1NTczMzAw

NzQsImF6cCI6Im10Y2J3cUkycjYxM0RjT04zOAMRYUhMcVF6UTRka2huIiwic2NvcGUiOiJlbWFpbCBw

cm9maWxlIG9mZmxpbmVfYWNjZXNzIiwiZ3R5IjoicGFzc3dvcmQifQ.lW2UalihR5msHKhJzDPvy5SCK

xSPyUCMuQ7RXyP2ZNQ2gENjGF2nmdsYlF2CqxH_wITcK10CproQErMK_yAWUSEck8qfC1Fu_UNc9-xW5

5ALeCk09ZZD--aB_QFjLVM-ooawby7y4Ysf8H4yEBQpoPwZoQ3DQnu5QBNxd5oOLIP2ezzNYvrwjpm-u

NN8II5sK9U075Mx1HH31KG14iFt5sEZQmYOz-oSWweVuY6Sd61VFD02sncXOmEZIxu3bdaZSn1JYaM-i

lLce4s748iv75BVDgqj1b2A1lyITeqvFoYWl3PKV56fOlfm8v9QnkSqR0iTGENgV6zZq3rPRsBLTw",

"expires_in": 2419200, "scope": "openid profile offline_access", "token_type":

"Bearer" }

You should replace the previous access_token with this one for any further requests to the API.

Adding a Third-Party Developer

For Third-Party Developers, asking the client to use their ShipHero username and password is not ideal or safe.

However, there is another way of providing Third-Party Developers with the Bearer Token and the Refresh Token so they can work without the client’s ShipHero credentials.

You can do this by going to https://app.shiphero.com/dashboard/users and clicking the +Add Third-Party Developer button.

After adding the developer you should be able to see the Bearer Token and the Refresh Token. These tokens should be provided to the developer.

Note

Always communicate secrets in a secure way.

Schema & Docs

The API is built with GraphQL.

One of the greatest benefits of GraphQL is its self-documenting nature, which allows the users of the API to be able to navigate through the schema, queries, mutations, and types so you know exactly what can be requested, which parameters to pass and what to expect in return.

You can check an extract of the Schema here. We keep this up to date with every update to the API.

There are also several client IDEs that can be used to interact with the API and navigate it, such as GraphQL Playground and Altair.

Note

We recommend always using the desktop versions or the extensions of these Client IDEs. Web versions usually have connection errors.

Using your token and pointing to https://public-api.shiphero.com/graphql, you can access both the Schema and the Docs, for example with GraphQL Playground or Altair.

Note

You don’t have to make a query to access Schema and Docs.

Queries & Mutations

As in any GraphQL API, you will have queries and mutations, and in both of them, the result will always be an object. In GraphQL, queries or even mutations can return any type of object, even Connection Fields (which are the way to have paginated results), but we have made a design decision to make every operation return a BaseResponse object. This object allows us to include extra information or metadata to the responses, without polluting the resource types (more on this later).

Queries

When making queries there are two extra parameters that can be defined: sort and analyze.

- sort applies only to queries that return multiple results (Connection Fields). Here you can specify a comma-separated list of attributes to sort the results by. The default order is ascending, but you can set the direction for each field by prepending + or -. Ex: sort: “name, -price”
- analyze is a boolean flag. When set, the API only computes the complexity of the query, without executing it (for more info, check the Throttling & Quotas section).
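As a small illustration of the sort syntax, a client could assemble the string from (field, descending) pairs. The helper build_sort is hypothetical, and the ", " separator simply mirrors the example above.

```python
from typing import Iterable, Tuple


def build_sort(fields: Iterable[Tuple[str, bool]]) -> str:
    """Join (field_name, descending) pairs into a sort string.

    Ascending is the default, so only descending fields get a "-" prefix.
    """
    return ", ".join(
        f"-{name}" if descending else name
        for name, descending in fields
    )


# Sort by name ascending, then by price descending.
print(build_sort([("name", False), ("price", True)]))
```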

The BaseResponse object returned on every query will always have the following fields:

- request_id: A unique request identifier
- complexity: The complexity of the query
- data: The actual results of the query (a Field, List or Connection Field)

Every connection field accepts the common relay parameters to specify the number of results to get. If first and last are not passed, a default max of 100 will be applied. Keep this in mind: the more results you request, the higher the complexity of the query, and the more credits from your quota will be consumed.

Example getting all products

query {
  products {
    complexity
    request_id
    data(first: 10) {
      edges {
        node {
          id
          sku
          name
          warehouse_products {
            id
            warehouse_id
            on_hand
          }
        }
      }
    }
  }
}

Example getting a product by SKU

query {
  product(sku: "some-sku") {
    complexity
    request_id
    data {
      id
      sku
      name
      warehouse_products {
        id
        warehouse_id
        on_hand
      }
    }
  }
}

Mutations

For mutations the same rules apply, except that the result field is not called data. Its name is defined by each mutation, so if you create a product, you will usually get a product field in the response.

Example creating a product

mutation {
  product_create(data: {
    name: "New Product"
    sku: "P0001"
    price: "10.00"
    value: "2.00"
    barcode: "000001"
    warehouse_products: [
      { warehouse_id: "V2FyZWhvdXNlOjExNA==" on_hand: 5 },
      { warehouse_id: "V2FyZWhvdXNlOjEyODg=" on_hand: 15 }
    ]
  }) {
    request_id
    complexity
    product {
      id
      name
      sku
      warehouse_products {
        id
      }
    }
  }
}

Note

For more examples on Queries and Mutations you can also visit: Examples

Throttling & Quotas

Opening an API to the public means many things can go wrong: some users might abuse it, and some will proceed by trial and error. Given the dynamic nature of GraphQL, users are responsible for building the queries, and intentionally or not they can come up with extremely expensive ones. To prevent those from affecting the performance of the entire API, some measures have to be taken. That’s why we have implemented rate limiting based on user quotas.

Each operation performed by the public API has a calculated complexity, representing the cost of executing that particular operation. Following this, users will start with an initial amount of 4004 credits, and every second, 60 credits are restored. How many operations you can execute will depend on how you build them. The same query can have a different complexity if you decide to fetch a lot of information from the results, or if you navigate across relationships to enrich the results. No operation can exceed 4004 credits.

Given the dynamic nature of GraphQL operations it might be hard to imagine the cost of an operation, so here’s where the analyze parameter available in queries becomes relevant. If you send analyze: true the query won’t be executed, but instead, only the complexity will be calculated. You can then retrieve the cost of that query by accessing the complexity field from the response.

The main concepts that make the throttling strategy are the following:

- Customers are given 4004 credits shared across all users.

- Every second, 60 credits are restored (this is the increment_rate).

- No operation can exceed 4004 credits.
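To make these numbers concrete, the sketch below estimates how long a client would have to wait before an operation becomes affordable. It assumes credits are restored linearly at the increment rate and that no other user of the account spends credits in the meantime. The function name is hypothetical.

```python
import math

MAX_CREDITS = 4004    # credits per account, from this guide
INCREMENT_RATE = 60   # credits restored per second, from this guide


def seconds_until_affordable(
    required: int,
    remaining: int,
    increment_rate: int = INCREMENT_RATE,
    max_credits: int = MAX_CREDITS,
) -> int:
    """Whole seconds to wait until `required` credits are available."""
    if required > max_credits:
        # No amount of waiting helps: the operation exceeds the account limit.
        raise ValueError("operation exceeds the maximum credits per account")
    deficit = required - remaining
    if deficit <= 0:
        return 0
    return math.ceil(deficit / increment_rate)


print(seconds_until_affordable(required=101, remaining=55))
print(seconds_until_affordable(required=4004, remaining=0))
```

An empty pool refills in about 67 seconds at this rate, so even the most expensive allowed operation is never more than roughly a minute away.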

Per account credit limits will be strictly enforced, so please keep this in mind when using the public API.

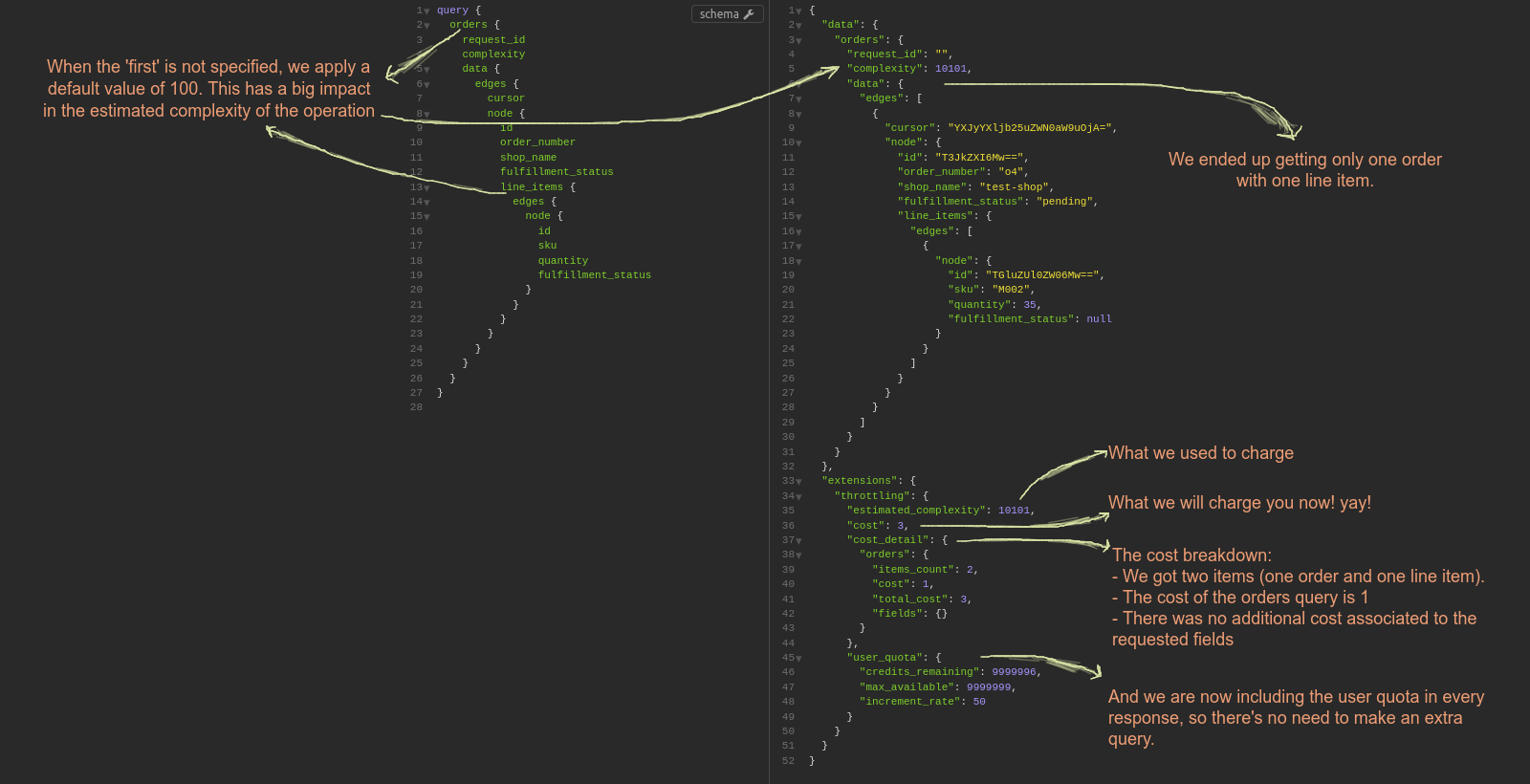

How we charge for credits

We analyze the query, compare the expected cost (based on the number of results requested) with the actual cost of the returned results, and credit your pool back with the difference.

For every request, we calculate an estimated cost based on the operation requested. The estimation is greatly affected by the relay parameters used in connection fields. When no first value is defined, we always assume a default of 100.

You will be charged for what you get. If you asked for 100 results but got 5, we will only charge you for those 5. Let’s see it with an example:

A common question has been:

How do we iterate over order line items without asking for too much (thus producing a more expensive query) but not too little (having to make another query to get the remaining line items)?

Well, no need to worry about that anymore. Just ask for what you think you should get. If you get less, you won’t be charged. This means you don’t need to worry too much about optimizing the first parameter. It’s better to ask for 50 and get 30 than asking for 25 and needing to make an extra query to fetch the remaining 5.

Note

Although we return credits not spent, we still require that calls don’t exceed the maximum allowed per account. This means that if your account has an upper limit of 4004 credits, the query structure should not require more than 4004 credits. You can use the analyze argument present in all our queries to check this before making the call.

Warning

Aside from the quota, ShipHero strictly enforces a limit of 7000 requests over the last 5 minutes. Going over that threshold will trigger a “429 Too Many Requests” response on subsequent requests until you drop below it again.

This also includes requests that returned an error and IntrospectionQuery requests.

What about specific field costs?

Some of our schema fields have a cost associated with them. Now, whenever you request one of those fields, you will see the cost and the impact it has on the overall cost of your request.

Analyzing query

In this case the query is not executed, so the result won’t include any products. It is only analyzed to calculate its complexity, which lets you know beforehand how many credits it will consume.

query {
  products(analyze: true) {
    complexity
    request_id
    data(first: 10) {
      edges {
        node {
          id
          sku
          name
          warehouse_products {
            id
            warehouse_id
            on_hand
          }
        }
      }
    }
  }
}

The output would look like this:

{
  "data": {
    "products": {
      "complexity": 101,
      "request_id": "5cea345gsn87a",
      "data": {
        "edges": []
      }
    }
  }
}

Throttling error

When you request an operation that exceeds your user quota you will receive an error like this one:

{
  "errors": [
    {
      "code": 30,
      "message": "There are not enough credits to perfom the requested operation, which requires 101 credits, but the are only 55 left. In 4 seconds you will have enough credits to perform the operation",
      "operation": "inventory_changes",
      "request_id": "5da7dc13f3079f0def208711",
      "required_credits": 101,
      "remaining_credits": 55,
      "time_remaining": "4 seconds"
    }
  ],
  "data": {
    "inventory_changes": null
  }
}

Note

A useful way to avoid throttling errors is to read the “time_remaining” field returned with the error and extract the number from it.

Then, using some sort of wait() or sleep() call, you can make the script pause until enough credits are restored. With that in place the script no longer fails for lack of credits, and your query can keep running until you get all required results.
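One possible shape for that wait-and-retry logic is sketched below. It assumes throttling errors carry code 30 and a time_remaining string such as "4 seconds" or "55 minutes", as in the sample errors in this guide. The "hour" unit, the helper names, and the execute callable are assumptions added for illustration.

```python
import re
import time
from typing import Callable

# Units seen in the sample errors, plus "hour" as a defensive assumption.
_UNIT_SECONDS = {"second": 1, "minute": 60, "hour": 3600}


def parse_time_remaining(value: str) -> int:
    """Convert a string like "4 seconds" or "55 minutes" into seconds."""
    match = re.fullmatch(r"\s*(\d+)\s+(second|minute|hour)s?\s*", value)
    if match is None:
        raise ValueError(f"unrecognized time_remaining value: {value!r}")
    return int(match.group(1)) * _UNIT_SECONDS[match.group(2)]


def run_with_throttle_retry(
    execute: Callable[[], dict],
    max_attempts: int = 5,
    sleep: Callable[[float], None] = time.sleep,
) -> dict:
    """Call execute(); on a throttling error, wait and try again."""
    for _ in range(max_attempts):
        response = execute()
        throttled = [
            error for error in (response.get("errors") or [])
            if error.get("code") == 30
        ]
        if not throttled:
            return response
        sleep(parse_time_remaining(throttled[0]["time_remaining"]))
    raise RuntimeError("still throttled after the maximum number of attempts")


print(parse_time_remaining("4 seconds"))
print(parse_time_remaining("55 minutes"))
```

Here execute stands for whatever function sends your query and returns the decoded JSON response. Making sleep injectable keeps the loop easy to test without real waiting.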

User Quotas

You get the status of your user quota in every response.

After a few operations, you might want to know how many credits you have available and for how long. That’s exactly what the user_quota query will provide you:

query {
  user_quota {
    credits_remaining
    max_available
    increment_rate
  }
}

Optimizing a Query

Usually, if a query has connections, it means that it will consume a large number of credits.

In these cases, best practice is to include the first or last argument, specifying the number of items you want to be returned (this is equivalent to limit).

By doing this, you’ll be able to optimize your Query and consume fewer credits.

For example, let’s suppose we use the Orders Query to get orders and their line_items, but we don’t include first or last on the connections.

The Query should be something like this:

query {
  orders {
    request_id
    complexity
    data {
      edges {
        node {
          id
          order_number
          line_items {
            edges {
              node {
                id
                sku
                quantity
                product_name
              }
            }
          }
        }
      }
    }
  }
}

And the response will be an error like this:

{
  "errors": [
    {
      "code": 30,
      "message": "There are not enough credits to perfom the requested operation, which requires 10001 credits, but the are only 5000 credits left. You can execute queries up to that level of credits or wait 55 minutes until your quota is refreshed",
      "operation": "orders",
      "request_id": "5d654ee06c22facb34d95769",
      "required_credits": 10001,
      "remaining_credits": 1001,
      "time_remaining": "55 minutes"
    }
  ],
  "data": {
    "orders": null
  }
}

Which means we don’t have the necessary credits for this Query.

Important

The reason is the following:

- The cost of a single operation is 1.
- If we don’t include first or last, it assumes a maximum quantity of 100 orders and 100 line_items for each order.

So the total estimated credits are: 1 + 100*100 = 10001

Instead, if we narrow the Query down to the first 10 orders and the first 10 line_items of each order, the Query should look like this:

query {
  orders {
    request_id
    complexity
    data(first: 10) {
      edges {
        node {
          id
          order_number
          line_items(first: 10) {
            edges {
              node {
                id
                sku
                quantity
                product_name
              }
            }
          }
        }
      }
    }
  }
}

Which means that the total estimated credits are: 1 + 10*10 = 101
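The arithmetic for both versions of the Orders Query can be reproduced with a few lines of Python. This only mirrors the estimate worked out in this section for one connection nested inside another. It is not the server's actual cost algorithm, and the function name is hypothetical.

```python
from typing import Optional

DEFAULT_PAGE_SIZE = 100  # assumed when neither first nor last is given


def estimate_nested_complexity(
    outer_first: Optional[int] = None,
    inner_first: Optional[int] = None,
) -> int:
    """Estimate 1 + outer * inner for a connection nested in a connection."""
    outer = DEFAULT_PAGE_SIZE if outer_first is None else outer_first
    inner = DEFAULT_PAGE_SIZE if inner_first is None else inner_first
    return 1 + outer * inner


print(estimate_nested_complexity())        # no first/last on either connection
print(estimate_nested_complexity(10, 10))  # first: 10 on orders and line_items
```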

Note

hasNextPage can be included on the Query under pageInfo field. This will help verify if more queries are needed to get the rest of the data.
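A minimal sketch of a loop driven by hasNextPage follows. It assumes the standard Relay names endCursor (inside pageInfo) and an after argument on the connection, which this guide does not confirm. fetch_page is a hypothetical callable that runs your query for a given cursor and returns the connection object. A fake two-page data set stands in for the API so the example runs offline.

```python
from typing import Callable, List, Optional


def fetch_all_nodes(fetch_page: Callable[[Optional[str]], dict]) -> List[dict]:
    """Collect nodes from every page until hasNextPage is false."""
    nodes: List[dict] = []
    cursor: Optional[str] = None
    while True:
        connection = fetch_page(cursor)
        nodes.extend(edge["node"] for edge in connection["edges"])
        page_info = connection["pageInfo"]
        if not page_info["hasNextPage"]:
            return nodes
        # Assumed Relay convention: pass endCursor as the next "after" value.
        cursor = page_info["endCursor"]


# Fake pages keyed by cursor, standing in for real API responses.
_pages = {
    None: {
        "edges": [{"node": {"sku": "A"}}, {"node": {"sku": "B"}}],
        "pageInfo": {"hasNextPage": True, "endCursor": "cursor-1"},
    },
    "cursor-1": {
        "edges": [{"node": {"sku": "C"}}],
        "pageInfo": {"hasNextPage": False, "endCursor": None},
    },
}

skus = [node["sku"] for node in fetch_all_nodes(lambda after: _pages[after])]
print(skus)
```

Each page is a separate request and consumes its own credits, so a loop like this pairs naturally with a wait between requests when the quota runs low.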